If you’re wondering where I’ve gone off to, you’re one of about fifteen people who actually read this blog.

Also, I’m now writing for Nerd Or Die. You can find my stuff there.

Along with about half the developed world, I watched the iPad 3 announcement with keen interest. I’m not an Apple fan, but neither am I one to simply dismiss a new product without knowing anything about it. Nevertheless, after watching video and a live blog feed, I felt the same way I felt after the iPad 2’s debut: disappointed.

I feel it necessary at this point to note that I do not simply hate Apple for the sake of hating Apple (or hipsters, turtlenecks, or those newfangled MP3 players for that matter). Shortly after the first time I used a PowerBook G4 running OS X, I was very nearly converted wholesale to the brand. I’ve spent most of the past five years doing my damnedest to defend Apple in every way. And I have to be honest: there is no defense left. There is no rationalization to justify their business practices, either in the marketing realm or in the way they treat app developers. That being said, I still go into every new product launch with some hope that they will redeem themselves. And so far, every new product launch of the past three years has left me jaded and frustrated. The same pattern has repeated itself twice now with this release of the iPad. The iPad 3, or the “new iPad” as Apple is calling it simply, has followed its predecessor with glam, flash and pizzazz in spades. But when it comes to the actual meat and potatoes of the whole affair, this is a rather slim meal.

The new iPad is light on new features. Though Tim Cook and company were able to pack a 90-minute presentation with content and show dozens of new things available on this iPad, much of this was fluff, not unlike a 10-page essay written by a high school sophomore which only contains about 2 pages of actual content. Most of the excitement is focused on the tablet’s screen, a so-called Retina Display. While a true Retina Display clocks in at 326 pixels per inch, the iPad’s is only 264, about 80% that of its little brother (a true Retina-armed iPad would have a 2500×1900 display resolution, a very expensive proposition considering the vehicle). But Cook is more than happy to say this is “good enough to call it a Retina Display.” This tells me the Retina name is becoming little more than branding, used to create hype and agitation amongst its legions of sworn defenders. Moreover, the actual merits of the Retina Display are at best debatable, as the visual acuity of a human eye can still resolve pixels at the intended distance. But Apple expertly used this, and a few other features, to make the whole thing seem much more than it really was.
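For anyone who wants to check the density math, here is a rough sketch. The 2048×1536 resolution, the 9.7-inch diagonal, and the 326 ppi phone-class target are my own assumptions plugged in for illustration, not figures from the keynote notes above, and the function names are made up for this example.

```python
import math

def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density from a panel's resolution and its diagonal size."""
    return math.hypot(width_px, height_px) / diagonal_in

def resolution_for_density(target_ppi, diagonal_in, aspect_w=4, aspect_h=3):
    """Resolution a panel of this size and aspect ratio needs to reach target_ppi."""
    aspect_diagonal = math.hypot(aspect_w, aspect_h)
    width_in = diagonal_in * aspect_w / aspect_diagonal
    height_in = diagonal_in * aspect_h / aspect_diagonal
    return round(width_in * target_ppi), round(height_in * target_ppi)

# Assumed specs (not from the post): 2048x1536 pixels on a 9.7-inch panel.
ipad_ppi = pixels_per_inch(2048, 1536, 9.7)
print(f"new iPad density: {ipad_ppi:.0f} ppi")

# Assumed phone-class target of 326 ppi on the same 9.7-inch, 4:3 panel.
needed = resolution_for_density(326, 9.7)
print(f"resolution needed for 326 ppi: {needed[0]}x{needed[1]}")
```

Under those assumptions the required resolution lands close to the 2500×1900 ballpark, which is why matching a phone's density on a tablet-sized panel is such an expensive proposition.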

Almost every other new aspect of the iPad is new only to Apple. The quad-core graphics have been in use by competitors for over a year now. The 1080p camera, while always an enjoyable feature, has also been done, although to their credit the software supporting it is nothing to scoff at, in particular the image stabilization. The camera, however, lacks an LED light, essential for taking pictures when you don’t have that convenient star on your side of the Earth. 4G is also a worthy advancement, although whether this is actually worth it has yet to be seen, as Apple’s history with antennae is questionable. Cook also claimed that the new 4G model still maintained the same battery life with only a minute increase in thickness and weight–another assertion that will need to stand the test of the real world in a month or two.

And, once again, the iPad still lacks two things the entire remaining computer world doesn’t: SD card support and USB support. This one still baffles me. No tablet should be without either of these. Memory cards make transfer of large data files simple (and, in some cases, more secure) and allow for expansion of existing memory. Without this, iPad users are stuck with whatever the iPad comes with–64GB may seem like a lot for a tablet now (assuming you can afford it), but if you plan on watching lots of movies on it you will quickly find yourself wanting more. And that’s when Apple will probably release a 128GB version, no doubt at an exorbitant premium.

Phil Schiller was eager to point out that Android apps on a tablet “[look] like smartphone apps blown up.” Lots of wasted space and too-large text were displayed on the gigantic screen behind him as he picked apart the Android designs. Notably, he cherry-picked only a couple apps to compare, and did not run any comparisons against Windows Phone or Blackberry apps. Badly designed iOS apps do exist, however–not even Apple’s perfect, flawless operating system is immune to the mistakes of developers. This is clearly another shot at Android, the OS that “ripped off Apple,” even after Apple ripped off Android.

Possibly the most absurd part of the presentation related to gaming. Opening the segment, Cook stated that in internal polls, the iPad 2 was the preferred gaming device of the majority of households, even being “preferred over home consoles.” Without overstating it, this is laughable. Tablet gaming has its uses, but it has just as many limitations, namely that it is difficult to come up with a comfortable control scheme without the user’s hands blocking significant parts of the screen–and then there is the prospect of holding a half-pound object aloft while also attempting to tap away with reasonable accuracy for any considerable amount of time. No household with real gamers would consider the iPad superior to the PS3, Wii, or even 3DS. Epic Games’ Mike Capps appeared onstage and demoed a couple games for the new iPad, throwing his unconditional support behind it and even going as far as to tell the audience that it is a superior platform to the Xbox 360. Considering Apple’s history of stabbing developers in the back, it would be a supremely satisfying irony if this fate were to befall Epic.

Once again, Apple has turned a lot of nothing into something revolutionary. No doubt millions will mob Apple stores throughout the world to get their own iPad 3, stepping over their own mothers to take out loans against their twice-mortgaged homes just to own one. What concerns me most is the thought that this model might catch on. While many products are already being called iPad clones, and many claims are thrown about focusing on who is copying Apple’s design methods, the fact remains almost all of these competing products have more features and are capable of more functions than any iPad on the market. When other manufacturers start realizing that they can add marginal improvements and bill them as revolutionary progress on par with the invention of the transistor…I shudder to think where the market will go after that.

I’m now convinced that Sony is determined to come up with the most sadistic way to store data possible, and see if people still buy it. It’s like they’ve created their own tech-centric version of Opus Dei that drives its followers to inflict suffering upon themselves in some futile chase to gain the approval of God.

Let’s look at Sony’s recent history of storage formats, shall we?

Start in 1975, with their Betamax cassette. While a significant jump in quality over the competing VHS, Beta had two major drawbacks. The first was limited capacity, a logical expense for the tape’s higher resolution (roughly 50% greater than VHS). The second was much more detrimental–Sony’s jealous and suffocating guarding of the Betamax technology. Few outsiders were allowed licenses to produce the cassettes and players/recorders, hindering the supply of releases and increasing the cost. Beta died a slow, lingering, and unsurprising death at the hands of the much more widely available VHS.

In 1992, Sony decided to take another swipe at a format completely under their control, and the MiniDisc hit the market, an attempt to combine the best attributes of magnetic and disc media. Sony again failed to open the technology to consumers, producing only a handful of MiniDisc read/write drives (not counting the MiniDisc players themselves, some of which could be used to write data to cartridges). While it was (sort of) a hit in Japan, MiniDisc failed to gain any traction in America or Europe.

Skip ahead another decade, and Sony’s introduction of the Universal Media Disc to coincide with its PSP system. While it had promise with its high capacity of 1.8GB, Sony again closed off avenues of production, amid piracy concerns. Intent on making a portable disc-based gaming system, they designed it with a very similar physical format to the MiniDisc, encasing the actual disc in protective plastic that turned out to be rather flimsy and unenduring. Once again, Sony’s attempt to stifle piracy failed, as it was relatively easy to use a PSP as a reader connected to a computer and directly rip images of games off the UMDs themselves.

Meanwhile, the Memory Stick was pushed to market in 1998, for use in Sony’s digital cameras and the (late) Clié PDA. While a formidable technology in terms of performance, once again Sony stifled production in the name of control and the Memory Stick was limited almost entirely to use in Sony’s products alone.

The company managed to change their fortunes with the breakout Blu-ray technology. Designed as a successor to DVDs, Blu-ray competed directly with the lower-capacity, but cheaper, HD DVD. This time, Sony did not fully control the format, working to design and implement it with over a dozen major manufacturers to give it a boost in market penetration. Beyond high-definition movies and mass storage, however, Blu-ray has seen little innovation.

Now Sony is preparing to paint themselves into a new corner with the looming Vita. The new handheld will make use of a proprietary memory format and a new storage format for the games themselves. Sony has already commented that memory use will be highly compartmentalized–games that run on internal memory will not have access to external memory, and vice versa. Furthermore, the use of yet another controlled format will only drive prices up–it has already been announced that 32GB will cost $120, as opposed to a same-capacity microSD card which costs a third of that; for a more fair comparison, look at 32GB Memory Stick Pro Duos, which are in the $80-90 range. This is not going to persuade many people to buy memory at such a high cost. Sony’s fear of piracy is understandable, but they could have saved consumers, and themselves, untold costs if they had simply gone with an established memory format instead of designing and implementing their own. We have yet to see how this one holds up, but I’m not holding my breath.
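To put the price gap in per-gigabyte terms, here is a quick sketch using only the figures quoted above. The $85 midpoint for the Memory Stick range and the names in the code are my own stand-ins for illustration.

```python
CAPACITY_GB = 32

# Prices from the paragraph above; microSD is "a third" of the Vita card,
# and $85 is an assumed midpoint of the $80-90 Memory Stick range.
prices = {
    "Vita card": 120.0,
    "microSD": 120.0 / 3,
    "Memory Stick Pro Duo": 85.0,
}

def cost_per_gb(price, capacity_gb=CAPACITY_GB):
    """Dollars paid per gigabyte of storage."""
    return price / capacity_gb

per_gb = {name: cost_per_gb(price) for name, price in prices.items()}
for name, value in per_gb.items():
    print(f"{name}: ${value:.2f}/GB")

# How many times more the Vita card costs than the open-format card.
vita_premium = prices["Vita card"] / prices["microSD"]
print(f"Vita premium over microSD: {vita_premium:.1f}x")
```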

I like to think of myself as having been a Nintendo fanboy since at least 1991, and I still prefer my Nintendo consoles to my others, for various reasons. But what’s been bugging me lately is their library. Not their new releases, which we all know are somewhat limited. No, it’s their overall library on the Wii and 3/DS/i that I’m talking about, specifically the digitally-distributed sort.

Nintendo started off the Wii launch with its most promising venture–its entire collection of previously-released games. The Virtual Console, paired with the Wii Shop Channel, opened the door for classics of all shapes and sizes to pour through the floodgates, bringing a wave of nostalgia to longtime fans–and bringing some dejected oldies to the attention of a new generation of players.

But as of right now those floodgates remain in a rather unfortunate state. Of late I’ve noticed a distinct lack of new releases on either the Wii Shop Channel or the eShop on the 3/DSi. Okay, I noticed it several months ago, but this time I decided to run some numbers. They don’t make for a great outlook.

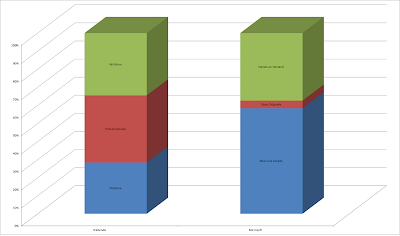

Total Nintendo Download Releases.

At present, 392 games are available on the Wii’s Virtual Console service. This amounts to roughly 3 new games every two weeks since the first games were pushed out on November 19, 2006. This doesn’t seem so bad in the end–400 games is a lot to choose from. But this is paltry compared to the vast libraries Nintendo has built in the past three decades. Just counting the NES, SNES, and N64, about 1,958 games have been released over the (many) years, depending on where you get your list. This number is highly debatable, and it is impossible to build a comprehensive list of all releases, so I will round down to 1,900 to be safe. Out of this number, Nintendo has tapped a mere 20% of the product pool, as it were. But that’s not the entire library, either. Games for the Master, Genesis, TurboGrafx, Neo Geo, Commodore 64, and arcade machines have also been made available. While it is impossible to account for all games released on the last two platforms, all the other systems total a count of nearly 3,500 games. This reduces VC’s library to a mere 11% of its total potential.
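If you want to rerun those numbers yourself, here is a small sketch. The counts and launch date come from the paragraph above; the `AS_OF` date is a hypothetical placeholder of mine (swap in whatever "today" is), and the function names are invented for this example.

```python
from datetime import date

VC_AVAILABLE = 392              # Virtual Console games available, per the text
VC_LAUNCH = date(2006, 11, 19)  # first Virtual Console releases, per the text
AS_OF = date(2011, 12, 1)       # hypothetical "today"; not stated in the post

def releases_per_two_weeks(total, start, as_of):
    """Average number of releases per two-week period between two dates."""
    weeks_elapsed = (as_of - start).days / 7
    return total / weeks_elapsed * 2

def percent_of_pool(available, pool_size):
    """Share of a back catalogue that has actually been re-released."""
    return available / pool_size * 100

rate = releases_per_two_weeks(VC_AVAILABLE, VC_LAUNCH, AS_OF)
nintendo_share = percent_of_pool(VC_AVAILABLE, 1900)     # NES + SNES + N64, rounded down
all_systems_share = percent_of_pool(VC_AVAILABLE, 3500)  # all countable systems

print(f"{rate:.1f} games every two weeks")
print(f"{nintendo_share:.1f}% of the Nintendo-only pool")
print(f"{all_systems_share:.1f}% of the full pool")
```

The pool sizes are the soft part here: as noted, nobody has a definitive list, so the percentages are only as good as the 1,900 and 3,500 estimates.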

The Virtual Console has also been made available on the 3DS Shop. Game Boy, Game Boy Color, and NES games have been pushed to the platform, but so far fewer than fifty titles are available. The GB and GBC alone open the possibility for over 1,100 games. I’m hoping they see more potential in it than they have previously.

More so than this, Nintendo has neglected some of the aforementioned systems on the 3DS Shop. The Master, Genesis, and SNES would work beautifully on the 3DS–in fact, I would be far more likely to play SNES games on the 3DS, because I’m not keen on using the Classic Controller with my Wiimote. Interestingly, the Virtual Boy could also be implemented on the 3DS, making use of the true 3D screen, and possibly even with full-color graphics. This is something I would like to see Nintendo do (but that’s a very long list).

[Figure: Note: This chart does not include PSN.]

Now for the damning comparison. Nintendo’s efforts have foundered compared to its two noncompetitors. While Microsoft has made fewer overall games available on Xbox Live, the most original titles are seen there. PSN features the fewest original works, possibly due to the burden of cost being shifted to the developers, but Sony has made oodles of games available for download via the service. The chart below visualizes the release rate for Nintendo and Microsoft only. Sony is not accounted for, since virtually all PSP games, a vast proportion of PS3 games, and considerable original titles are available for download. In addition, TurboGrafx and Neo Geo games are available on PSN, in greater numbers than what Nintendo has to offer, along with Dreamcast games.

[Figure: A little perspective.]

While the graph shows Nintendo in a solid lead over Microsoft, it’s worth noting Microsoft has released fewer than 40 of its original Xbox games on Live. Both Xbox Live and PSN are making much more headway with original works, and have fostered better connections with indie developers. While this isn’t surprising given Nintendo’s history with third-party developers, that doesn’t make it any less dismaying. Nintendo is only leading the pack with sheer numbers of regurgitated titles, instead of working with independents to help create innovative and quirky motion-controlled (or 3D) games that could revitalize their catalogue.

If Nintendo’s long-term strategy is to lean on its golden oldies, so be it. But they better grab an oar and start paddling, because the propeller has long since given out. If not, they better start building a whole new boat, and set sail post haste, because their rivals have seen much more ocean.

I’ve not been the biggest fan of the succession of Modern Warfare games of late. Don’t get me wrong, I’m not the hipster MW hater much of the internet has come to be, hating the franchise simply for the sake of hating the franchise. I loved the first game, not least because it had a nicely executed OHSHI- moment that really made the player confront mortality, a rarity in shooters. The second game changed the main character into something of a superhero, gunning down enemies in a James Bond-style chase down a snow-laden mountainside, surviving a knife wound to the chest, and still managing to use the same knife to kill an enemy.

Modern Warfare 3 does a better job of using multiple viewpoints to tell the story, including one segment in which all the player does is literally walk a few feet before that character dies in a sudden terrorist attack. But I have other fish to fry.

I would say that Activision is worrying me with the way this series is going, but that would be something of an understatement. So far little progress has been made with it, other than innovative storytelling. The games use virtually the same engine, with only minor tweaks (though Infinity Ward claims they are major advancements on par with the invention of the third axis). Modern Warfare 2 and 3 are little more than glorified map packs–indeed, several of the maps are virtual copies taken from previous games, and many map structures appear to have been copypasted into “new” maps. About the only part of the engine that shows improvement is the lighting, and even that is debatable, as shadows are blocky and pixellated, and roughly the level of quality I would expect on a low-end Wii game.

[Figure: Find the differences. I dare you.]

Then there’s Elite. This aspect of the game worries me the most. The idea is this: you pay a subscription fee, and you get access to all new content–map packs, weapons, whatever–released during the subscription period. Considering Activision has held a pretty strong record of releasing maps for a rough price of $5 apiece, this sounds like a great way to save some money.

I’m skeptical that this will actually be to the players’ advantage, even ignoring the fact that this is Activision. For Black Ops, the publisher managed to push out four map packs in a year’s time, which at $15 a pop would actually have justified a subscription service such as Elite. But this is not a pace they’ve kept with other games. Even were this not the case, Elite creates an incentive for the publisher to go slow: the slower they push out content, the more they milk the subscribers for. And again, being Activision, I can absolutely see them pumping the teats dry.
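The break-even logic is easy to sketch. The $15 pack price and the four-packs-a-year pace come from the Black Ops example above; the subscription price is a hypothetical placeholder of mine, since I haven't quoted one here, and the function names are invented for illustration.

```python
import math

PACK_PRICE = 15.0          # per map pack, from the Black Ops example above
SUBSCRIPTION_PRICE = 50.0  # hypothetical annual fee, NOT a figure from this post

def a_la_carte_cost(packs_released, pack_price=PACK_PRICE):
    """What a player pays buying every pack individually."""
    return packs_released * pack_price

def subscriber_savings(packs_released, subscription_price=SUBSCRIPTION_PRICE):
    """Positive when subscribing is cheaper; negative when the publisher wins."""
    return a_la_carte_cost(packs_released) - subscription_price

def break_even_packs(subscription_price=SUBSCRIPTION_PRICE, pack_price=PACK_PRICE):
    """Fewest packs the publisher must ship for the subscription to pay off."""
    return math.ceil(subscription_price / pack_price)

for packs in range(1, 5):
    print(f"{packs} pack(s) in the year: subscriber saves ${subscriber_savings(packs):+.2f}")
print(f"break-even at {break_even_packs()} packs")
```

With that placeholder fee, anything slower than the Black Ops pace leaves the subscriber in the red, which is exactly the incentive problem: every pack the publisher doesn't ship is money kept.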

Already cracks are showing in Elite’s armor. The service has not been active since the game’s launch a full week ago, with Activision blaming the outage on heavy use overloading the servers. Even more, they have moved the PC release of Elite into the “indefinite” category, and it’s unlikely the service will ever be available to the master race. This will force the PC consumers to buy map packs as they are released, and with a minor price increase, or by breaking packs up into fewer maps or less content each, this opens up another hose through which they can suction cash like so much crude at the bottom of the Gulf.

Today, Sony’s PlayStation devices are mostly free from the blight of open-source software. The XrossMediaBar organizes everything in a simple interface that is easy to navigate with a controller. Facebook, Twitter, Last.fm, YouTube and more have been integrated into the operating system, allowing enormous control at one’s fingertips.

But it wasn’t always like this.

Once upon a time, Sony was friendly to the homebrew community. The original PlayStation had a sizeable, if largely unseen, army of players and programmers making their own games at home to share with friends. In 1997, Sony capitalized on this by releasing the Net Yaroze, a development-kit PlayStation for home programmers to toy with. The Net Yaroze was popular, even being used in programming competitions in the United States, Europe and Japan. Many home-developed games appeared on demo discs of PlayStation-oriented magazines.

With the coming of the PlayStation 2, Sony continued the practice by producing a kit to install and run Linux from the unit, much in the same way as one might install it onto a home computer. While the intended purpose of this kit was also to spur development, it was mostly used to convert the systems into home servers of one type or another.

But fortunes began to wane when the PlayStation Portable arrived. Many attempts at homebrewing were consequently shut down by Sony, with each new system update bringing more frustration to the homebrewers. Most stack attacks and unsigned-software methods are now ineffective, limiting what could have greatly expanded the PSP’s market with cheap, simple, innovative games–the same mechanism that powers the smartphone software market.

[Figure: The rare Linux-armed Portable, seen in a laboratory.]

The death warrant was signed by the PlayStation 3 in 2010. Initially the console was distributed with the ability to run Linux without issue, as was specified in the original user license. But on April 1, 2010, Sony released a patch for the PS3 that removed the ability to install other operating systems, claiming it needed to close a security hole caused by George Hotz, aka Geohot, who had created a custom firmware using Linux. Lawsuits are now in progress against Sony, claiming that by doing this, the company violated its user license.

With the coming release of its newest product, the PlayStation Vita, Sony has revealed that games made to use external memory, or games running on its proprietary memory card, will not be able to access its internal memory. Conversely, games made to run on internal memory, or those downloaded from the PlayStation Store, will not have access to external storage. Any games that may need access to a memory card for saving will require that the memory card be present before the game is started. This prevents virtually all known forms of stack attacks or unsigned-code methods by closely limiting where the game software goes when in operation.

The future for homebrewing on Sony’s consoles is looking bleak. But if history has taught us anything, it’s that there is always a chance…that Sony may someday see the light and welcome its community back with open arms. But while it is entirely up to Sony to make this decision, one thing is for sure: the homebrewers will continue to operate, whether they are scorned or sanctioned.

Antique Marvels will return after the commercial with: Platformers.

So.

Origin is the hot topic of the day, as is Battlefield 3. The consumer whore I am, I decided I couldn’t wait to nab a copy of BF3 to play on launch day, but I’m wary of Origin (for obvious reasons). I’ve read statements from EA claiming that Origin would not be required for any of their games to run, which apparently have all been deleted from the internet–either that, or my hat isn’t working anymore, because apparently Origin is and will be required for (virtually) every game to run, including Battlefield 3. Oh well, I figured. I purchased it on Impulse in an attempt to get around this issue.

Not only did it not work, it backfired like a CIA operation. After a four-hour, 13GB download (yeah, my internet’s not great), I attempted to run BF3 through Impulse and got…a game key. That was it. A small window in the top center of my screen showing my game’s key, which when clicked on caused Origin to launch. Origin then prompted me for said key, after which point it began downloading the game. Even after installing via Origin, I could not launch the game from Impulse.

What is the point of selling the game through a third-party vendor when it can’t be used by that vendor’s software? I understand EA’s desire to have Origin on the market, and have its own social network. But it’s one thing to have Origin running in the background while the game runs, and entirely another to force someone to re-download the game even though it’s already been legitimately purchased through another storefront. Another four hours later, I was finally able to play the game.

[Figure: Thirteen gigabytes of wasted bandwidth.]

At this point I was confronted with the Battlelog, perhaps one of the most confusing elements in the game so far. Rather than having a server browser built into the game, which has been done for many years now, EA and/or DICE seem to think it was a good idea to make players manage their avatars and find servers from their internet browser, which then launches the game itself and loads everything up. This means that switching servers requires quitting the game and re-launching it, with no shortcuts in the middle. Meanwhile, the consoles have a fully functional server browser within the game, with almost all the options available to the PC players.

One argument I’ve heard is that this system allows for closer support for changes and patches. Isn’t this what Origin is for? Steam pushes updates to games automatically, so if Origin doesn’t do that, what’s the point? EA didn’t want to play Valve’s game of providing easy-to-get DLC and updates, so if they’re not going to use Origin for that, is this just their digital iron maiden to put players in? Seems like they’ve decided ActiBlizzard’s douchebaggery made them look too good, and they had to start a pissing contest.

Another defense is that this allows for simplification and a more unified interface for the players. Again…isn’t this what Origin is for? Origin allows direct access to the EA store via what is basically…a browser window. Couldn’t this be rolled into Origin, even in the exact state it’s in now? Apparently not. As the rule goes, it can’t be too easy or logical…somewhere it has to get convoluted, just to screw with people.

And what happens when this website is shut down? Certainly they won’t keep it running indefinitely. Someday the userbase will decline to a point at which EA decides it’s not worth running the Battlelog anymore, and then…no more play. If all servers were centrally hosted by EA, this argument might be baseless, but they’re not. They’re hosted by whoever feels like hosting one, to avoid the issues Activision ran into with peer hosting in Modern Warfare 2. But this is pointless if one can’t find the servers to login to, and unless EA/DICE decides to patch the game to include a server browser that isn’t going to happen. I’m beginning to become convinced the developers and publishers out there want to destroy the PC platform for no reason other than sadism, or perhaps boredom.

Duke Nukem Forever was put up on a Steam sale last week, so I decided to take it up. I’ve put four hours or so into it by now, and I have to say, I’m not impressed.

Interestingly, the skybox isn’t a clear image. When looking around at normal magnification, it looks perfectly fine, but when you zoom in the view–even the basic “iron sights”-esque zoom–it’s quite obviously fuzzy, as if the developers didn’t intend (or want) the player to actually look at it. This is a bit puzzling, because it wouldn’t have taken much more to fully detail the image, even on the off-chance of being looked at.

The shadows in the game behave oddly, to say the least. When the focus point of the player’s view changes, it seems all shadows are redrawn, so that objects seem to glow. This has distracted me on more than one occasion into thinking that the “glowing” object was my objective when it was just a minor lighting glitch. This even happens with some “permanent” objects, such as buildings and terrain, not just items that are sitting around. I can only surmise that this is also a result of the heavy modifications made to the game engine.

Probably the worst design decision the team made was to graft platform and puzzle elements into the game. In one stage, you come across a statue of Duke which must be used to reach the next floor of a building. The statue’s hitbox is small enough that one can very easily fall off the arms, making the task tedious and annoying. Later Duke must utilize a crane to continue his progress, and like most other puzzles in the game, the solution isn’t very clear. At least six times in the first six chapters, I have had to look up YouTube videos to solve these awkward puzzles, and each time I felt stupid for not noticing the solution, even though it was badly designed. Don’t get me wrong, these features can work in a shooter, but they don’t really fit into this game at all. Duke is all about fast and furious gunplay with weapons of absurd destruction. Breaking the momentum with tedious puzzles and framerate issues can kill the game, and in this case, probably does for many players.

What strikes me as particularly funny is that another game feels more like Duke than…well, Duke. BulletStorm is a game focused purely on gunplay, and capitalizes on this with skillshots that grant points for particularly interesting, unusual, or simply skillful kills of various flavors. These skillshots make the game far more enjoyable by encouraging creativity with kills, while having almost zero emphasis on plot or puzzle elements. I get the distinct feeling that BulletStorm is the game Duke Nukem Forever wanted to be. It’s certainly the one I enjoyed more.

Recently the gaming world was rocked by an earthquake of indescribable magnitude. That earthquake was the sound of one of the great titans falling–id Software, or more specifically that great god of gaming John Carmack.

For the past four years, id has been trumpeting the development process of their new engine, id Tech 5. This new engine would support enormous textures (as high as 128,000×128,000), live streaming of textures into the game world, automatic optimization of resources to make cross-platform development easier, and dozens of upgrades to increase the visual quality of games, such as multi-threading and volumetric lighting.

[Figure: Comparison: id tech 3, 4 and 5]

But when the hammer hit the nail, some bad things happened. Immediately the PC version of the game suffered from horrendous texture pop-in; if the player shifted their focus of view for even a moment, high-resolution textures would be moved out of memory, thanks to the texture streaming aspect that the studio so staunchly stood behind. The result is a world of constantly smeared textures, which looks so badly like an overused highlighter that even Joystiq had to take a shot at them.

Compounding the problem, the game had virtually no settings the player could access. Literally. These are settings more or less like those found on console games, where there is no need to allow players to alter every aspect of the game’s performance. A patch now allows more settings to be accessed, but being one of the chief advantages of the PC platform, this is something that should have been there from the start (and is there, in virtually every other PC game released in the last fifteen years).

This is all baffling when taken in the context of who made the game: John Carmack, one of the foremost PC developers of all time, and probably the most ardent crusader for the platform in this day and age. At this year’s QuakeCon, he spoke of the differences between PC and console development and mentioned (as he has many times before) that the limitations of consoles hold back PC game development because games essentially must be developed for the weakest platform, and can only be scaled up or adapted for the PC. From the way he constantly brings this up, it seems the logical solution is to abandon console development entirely and focus, with religious zeal, on the PC platform. But he insisted (or at least, someone insisted) on creating an engine and a game for all three platforms. The end result is…well, you can see for yourself.

Making things even worse, id has recently revealed that their test builds of the game ran on machines with drivers that had been customized. There are no words for how foolish a plan like this is. This would be like custom building a car engine that ran on a homemade concoction of fuel, and then complaining when that engine failed to run properly on the fuel that people actually sell at gas stations. AMD and nVidia, stunningly, have released driver updates that have greatly improved RAGE’s performance–but this arrangement is backward. Developers don’t dictate to hardware vendors what their drivers should do.

And now, as can be read in the above link, id is saying that the PC isn’t the “leading platform”?

A titan has truly fallen today. Let’s hope the PC pantheon can hold itself up.

Since I lack the necessary motivation and material to bitch about a particular piece of technology (though I may well have some fodder relating to a recent game), I’ll recap a few recent comics launches.

Moon Knight, now on its fifth issue, has been off to an interesting start. The titular star of the book, Marc Spector, is a wealthy businessman in Los Angeles who makes his money financing big-budget films and the like. In his spare time he uses his funds to fight crime as Moon Knight, along with his friends–Spider-Man, Captain America, and Wolverine–who assist him in some unusual ways. Right off the bat, the Knight finds an interesting piece of technology in the hands of a local gang, which starts him on a cat-and-mouse quest that has yet to bear fruit. So far I’m enjoying it, and I look forward to more issues.

Rating: 7.5/10

Planet of the Apes #6 hit shelves this month, as well. Timed to coincide with the release of the recent film, the comic tells the story of oppressed humans living in a Hooverville of a town on the outskirts of Mak, an ape metropolis. After the Lawgiver, the supreme authority of Mak, is killed by a human, the two species stand on the brink of war. The situation only gets worse when a cache of futuristic weaponry is discovered, some of which falls into the hands of the humans. It’s nice to see an original story that doesn’t attempt to rehash the Rise film, or Tim Burton’s now-forgotten piece. It will be interesting to see where this goes.

Rating: 7.5/10

Two months ago, Teenage Mutant Ninja Turtles celebrated its relaunch. This being the fourth volume of the comic since its original debut in the mid-Eighties, times have changed and so has the story. The first issue begins some eighteen months after an accident in which the Turtles and their master, Splinter, were exposed to toxic waste, at which time Raphael was separated from the group. Now Raph wanders the streets aimlessly, searching for a purpose, while Splinter directs the remaining three Turtles in the search for their lost brother. The art style gets some points for being a bit nonconformist, while not straying too far. I’m liking it.

Rating: 8/10

DC Comics recently had the bright idea to “relaunch” their entire line of comics, cancelling a large number of long-running series in the process and rebooting all continuity. Batman in particular took a backward step–after months of Dick Grayson running around as the Caped Crusader, DC decided to put Bruce Wayne back in the suit. While I’m not a fan of this decision (Grayson made a great Batman in my opinion), the first issue of this new run shows promise. Scott Snyder was the writer at the end of Detective Comics’ run, and his skill gives me faith. I’m happy to see him working on this series now.

Rating: 8/10

Green Arrow is the one I am less than thrilled about. Almost every aspect of the character has been tinkered with, to the point of violating a lot of the basic qualities he used to have. While he was previously a Robin Hood-like hero stalking about the city, now he looks rather like Duke Nukem in a domino mask. He has also been given a supporting team, not unlike Batman’s former partnership with Oracle, which is unusual for a character who has always been a loner. The series has an action-oriented feel to it, rather than the dark, brooding philosophy he used to have. I don’t think I will be following this one for long, but I’ll give it a few months.

Rating: 3/10