During this recent (and most impressive) string of PR failures on Microsoft’s part, all sorts of people came out of the woodwork on both sides of the argument. Among them was an engineer at Microsoft who posted a statement on Pastebin explaining and defending some of the company’s less popular policies. But Microsoft’s policies aren’t what bothered me (though their failure to competently explain their position was rather annoying); what got to me was one particular phrase in the text:

> Microsoft is trying to balance between consumer delight, and publisher wishes. If we cave to far in either direction you have a non-starting product. WiiU goes too far to consumer, you have no 3rd party support to shake a stick at.

To me, this statement is indicative of the ongoing conflict between publishers and consumers, not just in the gaming industry but in virtually every market. Until about a console generation ago, the gaming industry seemed more consumer-friendly than most. I suppose I shouldn’t be surprised that it didn’t last.

The phrase “too far to consumer” in particular irks me. There should be no such thing as “too far to consumer”. The consumers have the money. They decide what they want to buy. A publisher cannot demand that I do business on its terms; it is asking for my business. If it lays down demands and prerequisites, it doesn’t get my business.

Consumers need to stand up and let the publishers know this. They need to stop lying down and saying, “oh well, that’s how it is, may as well get used to it.” I am far from a principled person, and I have caved on some things, like Mass Effect 3, even though it meant putting up with Origin. But other things, like Blu-ray, I refuse to embrace because of their oppressive DRM. Still, the idea that publishers need to wage war against consumers, and wrap it in a cloak of “cloud computing” and “all-digital platform” half-truths, is absurd. At least Steam was open and up-front about its strategy to become an exclusively digital distribution service. Rather than make the same claim, Microsoft tried to argue that it would somehow be superior to Steam while imposing restrictions that were more burdensome and offered few advantages.

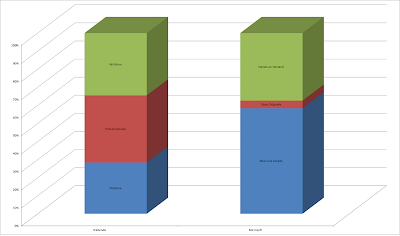

On a wider scale, publishers and developers have adopted a “divide and conquer” strategy for their products over the past several years. This is a subtler, but equally effective, approach to belittling their consumer bases. It is often compounded by a pseudo-currency system designed to force people to buy in bulk and end up with leftover credits that can’t be spent anywhere else.

Publishers and distributors should be begging for customers’ business. They should be asking nicely for those crisp dollars, and if they want more of them, they should be providing content that is worth the price. When a developer finishes work on a chunk of DLC, they should stop and ask themselves, “is this worth the money we want them to pay for it?”

But the sad reality of economics is that things aren’t worth a value determined by the cost of the raw goods used to produce them, or the man-hours spent, or the weight of the Brazilian royal family in 1871 divided by the rolling average of the number of car accidents in the developing world. They’re worth what people will pay for them. If people demonstrate that they are willing to pay $90 for a used video game, publishers and retailers will charge them that. It’s up to developers and publishers to put out content worth the price tag, but it’s up to consumers to stand up and say “no” when it isn’t.